News | Mar 4, 2024

Research Spotlight: Entropy Removal of Medical Diagnostics

Shuhan He, MD, a physician investigator in the Department of Emergency Medicine and the Laboratory of Computer Science at Massachusetts General Hospital and an assistant professor of Medicine (part-time) at Harvard Medical School, is the lead author of a recent study published in Scientific Reports, “Entropy Removal of Medical Diagnostics.”

What Question Were You Investigating?

When I talk with new internal medicine interns rotating in the emergency department, I tell them one thing: “It’s a game of incomplete information.” In the emergency department, chaos and uncertainty are constants, and as physicians, we must be adept at navigating them.

I’ve always wondered how we can show that this is true, that we really do work in a world of uncertainty. And can this chaos be measured, diminished, or even eliminated?

Our informatics research group published a paper that can help answer these questions. Titled “Entropy Removal of Medical Diagnostics,” it introduces a novel application of Shannon entropy and entropy removal, concepts derived from information theory, to emergency medicine.1

This methodology proposes a different approach to clinical decision-making in the emergency department based on the reduction of entropy/uncertainty.

Modern machine learning tools use entropy, a measurement of uncertainty, and we can apply the same tools in the emergency department to guide us toward quicker information acquisition.

This is a significant opportunity to treat patients based not on population statistics alone, but on each individual patient’s risk profile and uncertainty when they walk into the ER. I like to call it personalized data health.

What Methods Did You Use?

Shannon entropy is a measure of uncertainty. It is represented by a mathematical equation that calculates the average level of information, or unpredictability, associated with the possible outcomes of a random event. It is used broadly in digital devices and satellites and underlies much of the technology of our modern digital world.
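For readers who want to see the equation, the standard textbook form of Shannon entropy is shown here. The symbols $X$, $x_i$, and $p(x_i)$ are generic notation and are not taken from the paper; the base-2 logarithm is a common convention that gives the result in bits.

$$H(X) = -\sum_{i=1}^{n} p(x_i)\,\log_2 p(x_i)$$

Here $X$ is a random event with $n$ possible outcomes and $p(x_i)$ is the probability of outcome $x_i$. A fair coin flip has $H = 1$ bit, while an event whose outcome is already certain has $H = 0$.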

This concept has been widely adopted in machine learning, especially in generative pre-trained transformers (GPTs), which predict the next word. In GPTs, the model evaluates the probabilities of various words that could follow a given context, assigning higher probabilities to words that are more likely to occur based on the preceding context. By considering the entropy or uncertainty associated with each prediction, the model can refine its output to provide more accurate and contextually appropriate predictions.2
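As a rough illustration of what "uncertainty of a next-word prediction" means, the sketch below converts a set of scores into probabilities with a softmax and measures the entropy of the result. The scores are made up for illustration and do not come from any real language model; the function names are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical scores for four candidate next words (illustrative only).
confident = softmax([8.0, 1.0, 0.5, 0.2])   # one word clearly favored
uncertain = softmax([1.0, 1.0, 1.0, 1.0])   # all four words equally likely

print(f"confident prediction: {entropy_bits(confident):.3f} bits")
print(f"uncertain prediction: {entropy_bits(uncertain):.3f} bits")
```

When all four candidates are equally likely the entropy is at its maximum of 2 bits, and it falls toward 0 as one candidate dominates.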

Similarly, in medicine, when a patient first arrives at the Emergency Department, the level of entropy – that is, the degree of uncertainty or disorder – is at its highest. As the patient undergoes evaluation and diagnostic investigations over time, this entropy gradually diminishes. This decrease in disorder and uncertainty is a result of the accumulating information and insights gained through these assessments, leading to a clearer understanding of the patient's condition.

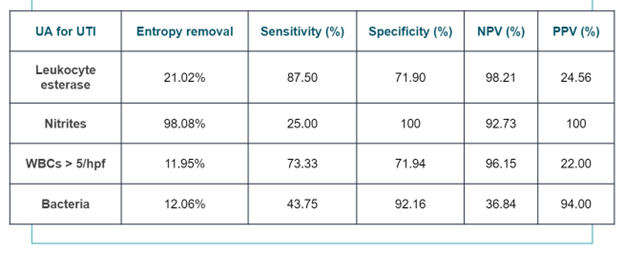

Most of medicine relies on established metrics such as sensitivity, specificity, negative predictive value (NPV), and positive predictive value (PPV) to guide our decisions in clinical scenarios. Sensitivity refers to the ability of a test to correctly identify individuals who have a specific disease or condition (true positives), whereas specificity is the test's ability to correctly identify those who do not have the disease (true negatives).

Positive Predictive Value (PPV) indicates the proportion of positive test results that are truly positive (i.e., the likelihood that someone with a positive test actually has the disease), while Negative Predictive Value (NPV) represents the proportion of negative test results that are truly negative (i.e., the likelihood that someone with a negative test does not have the disease). These measures are crucial in evaluating the effectiveness of diagnostic tests.
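The four metrics defined above can be computed directly from the counts of true positives, false positives, false negatives, and true negatives. This is a minimal sketch; the function name and the counts are hypothetical and are not data from the study.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute standard test metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # diseased patients who test positive
        "specificity": tn / (tn + fp),  # healthy patients who test negative
        "ppv": tp / (tp + fp),          # positive results that are correct
        "npv": tn / (tn + fn),          # negative results that are correct
    }

# Hypothetical counts for 300 patients (illustrative only).
metrics = diagnostic_metrics(tp=80, fp=30, fn=20, tn=170)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Note that PPV and NPV depend on how common the disease is in the tested group, which is one reason these numbers describe populations rather than a single patient.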

These metrics have demonstrated their effectiveness in assessing the reliability and accuracy of medical screening tests when applied to populations of people. However, they do not tell us about an individual patient’s circumstances at a given moment during an ER visit. It's why patients can sometimes feel frustrated that individual circumstances are not being taken into account.

How Are Entropy and Entropy Removal Applied in the ED?

Our paper calculated the entropy and entropy removal for the different findings in a urinalysis when diagnosing urinary tract infection (UTI). For instance, nitrites showed notably higher entropy removal than other indicators, meaning they provided the most information for reaching a diagnosis.
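One way to make the idea concrete is to treat entropy removal as the expected drop in diagnostic uncertainty after a test result is known, which information theory calls mutual information. The sketch below takes that reading as an assumption; the paper's exact formulation may differ. The function names, the pretest probability, and the sensitivity and specificity values are all hypothetical and are not results from the study.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a yes/no question with probability p of 'yes'."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def expected_entropy_removal(prior, sensitivity, specificity):
    """Expected uncertainty (bits) removed by learning a binary test result."""
    # Probability of each test result across all patients.
    p_pos = sensitivity * prior + (1 - specificity) * (1 - prior)
    p_neg = 1 - p_pos
    # Disease probability after each result (Bayes' rule).
    post_pos = sensitivity * prior / p_pos if p_pos > 0 else 0.0
    post_neg = (1 - sensitivity) * prior / p_neg if p_neg > 0 else 0.0
    # Average remaining uncertainty, weighted by how often each result occurs.
    remaining = p_pos * binary_entropy(post_pos) + p_neg * binary_entropy(post_neg)
    return binary_entropy(prior) - remaining

# Two hypothetical findings compared at the same assumed pretest probability.
prior = 0.30
finding_a = expected_entropy_removal(prior, sensitivity=0.45, specificity=0.95)
finding_b = expected_entropy_removal(prior, sensitivity=0.75, specificity=0.55)
print(f"finding A removes {finding_a:.3f} bits on average")
print(f"finding B removes {finding_b:.3f} bits on average")
```

Under this reading, a finding that removes more bits is more informative for the diagnosis, and a test that is no better than chance removes none.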

What are the Implications?

In our daily lives, having a clear understanding of the uncertainty reduction, or entropy removal, associated with each decision step allows us to make confident and informed decisions. This could allow us to make personalized, informed health decisions for each patient, with analytics based on new information as it arrives live in the ER. That would be a huge upgrade from what we generally do, which is to assess each patient’s risk based on their history and demographics, which is really a population-level question.

What are the Next Steps?

Our next step is to analyze Electronic Medical Records (EMRs) by treating the various activities recorded in the EMR (like appointments, diagnoses, and treatments) as if they were letters in an alphabet. This approach is similar to how Clinical Decision Support Systems (CDSSs) work in healthcare, which in turn is comparable to how Automatic Speech Recognition (ASR) systems work in technology.

In ASR systems, speech is broken down into basic sound units (phonemes) to understand and form meaningful sentences. Similarly, in healthcare, CDSSs analyze detailed medical information (like the 'letters' in EMR) to help doctors understand and manage complex medical situations. The CDSS, using its database (akin to a linguistic system), plays a crucial role in guiding clinicians through these complexities, much like constructing sentences from letters in a language. This analogy helps in understanding how data from EMRs can be systematically analyzed and used effectively in medical decision-making.

Papers Cited:

1. He S, Chong P, Yoon B-J, Chung P-H, Chen D, Marzouk S, Black KC, Sharp W, Safari P, Goldstein JN, Raja AS, Lee J. Entropy removal of medical diagnostics. Sci Rep. 2024;14:1181. doi:10.1038/s41598-024-1181-x

2. Brown TB, et al. Language models are few-shot learners. arXiv:2005.14165. 2020.
